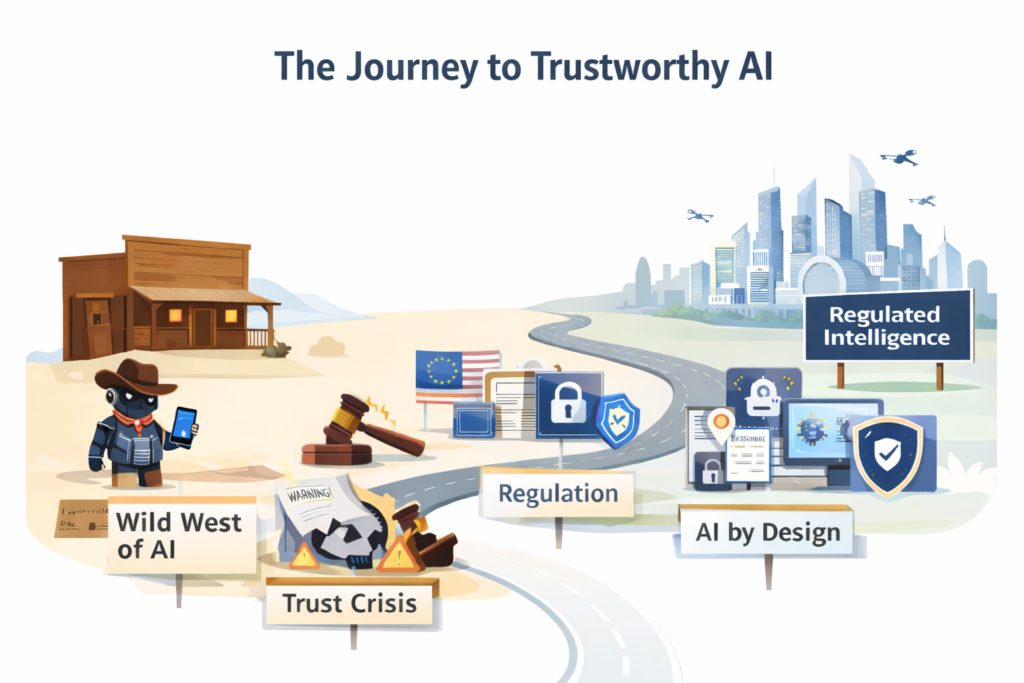

Trustworthy AI: From Wild West to Regulated Intelligence

In 2016, Microsoft’s chatbot Tay was taken offline within 24 hours after it began posting offensive content on social media. It became one of the earliest mainstream reminders that AI, left unchecked, can go wrong very quickly. Fast forward to today, and industry leaders like Sam Altman openly state that advanced AI systems must be aligned, governed, and deployed responsibly to avoid serious societal risks.

That shift tells a larger story. Trustworthy AI did not emerge as a branding exercise. It evolved through failure, regulation, and commercial pressure. What began as experimental innovation now resembles regulated intelligence.

This is the journey from the Wild West of AI to a world where AI privacy and security shape product strategy.

First stop: The Wild West of AI

The early wave of modern AI prioritised speed and disruption. Start-ups launched models rapidly. Big technology firms raced to demonstrate breakthroughs. Venture capital rewarded scale over scrutiny.

Few people spoke about AI data privacy. Fewer asked about model explainability. Governance rarely featured in launch announcements. Developers focused on performance metrics. Accuracy, speed, and automation dominated product messaging. The assumption was simple: if the system works, it ships. But intelligence without guardrails proved risky.

Second stop: The moment trust fractured

High-profile machine learning failures began appearing more frequently. Microsoft’s Tay incident revealed how models could be manipulated. Biased hiring algorithms drew criticism for replicating historical discrimination. Predictive policing tools faced backlash for reinforcing systemic inequalities.

Generative AI added another layer of concern. Early large language models produced misinformation and fabricated references. Enterprises hesitated to deploy systems without verification controls.

Public confidence weakened. Regulators took notice. AI privacy and security moved from niche concern to a headline topic. Trust was no longer automatic.

Third stop: The regulatory awakening

Governments responded with structure. The European Union introduced the AI Act, categorising systems by risk level. The UK adopted a sector-led regulatory framework. The United States increased oversight through federal agency guidance.

The message was clear: advanced systems require accountability.

Enterprise boards recognised the implications quickly. Risk committees asked tougher questions. Procurement teams expanded security questionnaires. Vendors now needed to demonstrate AI security best practices, not merely technical brilliance. Trustworthy AI began forming as a compliance reality rather than an abstract principle.

Fourth stop: AI by design replaces AI afterthought

Retrofitting governance proved expensive. Organisations discovered that patching privacy controls after launch creates technical debt. Legal exposure rises when documentation is incomplete. Brand damage lingers long after a public failure.

The response was structural change. AI by design became an operational principle. Privacy by Design AI frameworks entered development pipelines. Teams embedded encryption, access controls, and bias testing early in model training.
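To make "bias testing early in model training" concrete, here is a minimal sketch of what such a check could look like as a gate in a pipeline. It is an illustration rather than a description of any particular team's tooling: the function names, the toy data, and the 0.2 threshold are all assumptions, and demographic parity is only one of several fairness measures a team might choose.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def passes_bias_gate(predictions, groups, max_gap=0.2):
    """Hypothetical pipeline gate: fail when the gap exceeds max_gap.

    The 0.2 default is an illustrative assumption, not a regulatory value.
    """
    return demographic_parity_gap(predictions, groups) <= max_gap


# Toy example: model outputs for two hypothetical applicant groups.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

if __name__ == "__main__":
    print(selection_rates(preds, groups))
    print(demographic_parity_gap(preds, groups))
    print(passes_bias_gate(preds, groups))
```

Run against each candidate model before release, a check like this turns a fairness commitment into something that can block a deployment, which is the practical meaning of moving governance upstream.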

On-device AI processing gained traction as a practical solution. By analysing data locally, companies reduced exposure to cloud-based breaches. Smartphones began handling voice recognition and image categorisation without constant data uploads. Secure AI systems moved from backend infrastructure to visible architecture.

Trust started moving upstream in the lifecycle.

Fifth stop: Trust becomes visible

Something subtle changed in product messaging. Companies stopped hiding governance in legal documentation. They began highlighting AI trust and transparency features publicly. Transparency reports became routine. Responsible AI guidelines were published openly. Model cards documented training data and limitations.

Trust-centric product design made governance tangible. Privacy dashboards appeared in enterprise interfaces. Audit logs became accessible. Explainability tools allowed users to understand model reasoning. Trustworthy AI shifted from invisible safeguard to competitive signal. Buyers could now see discipline.

Sixth stop: Enterprise procurement redraws the map

Enterprise buyers redefined market incentives. Procurement teams demanded architecture diagrams and security certifications. They evaluated data provenance, encryption standards, and monitoring systems before approving contracts. AI privacy and security became deal-shaping factors.

Trustworthy AI shortened sales cycles when documentation was ready. Vendors that demonstrated secure AI systems reduced friction during compliance review. Legal teams felt reassured when AI trust and transparency mechanisms were embedded. Trust became commercial leverage. What began as risk mitigation evolved into premium positioning.

Seventh stop: Ethical AI development as market currency

Ethical AI development matured beyond bias statements. Human-centred AI design gained importance. Systems needed to respect dignity, fairness, and accountability. Companies established internal ethics boards and cross-functional governance teams.

Investors also shifted their lens and began examining governance maturity during due diligence. High-profile machine learning failures had shown how quickly a valuation can erode after a crisis.

Secure AI systems reduce regulatory fines and litigation risk. They signal operational maturity. They support long-term resilience. Trustworthy AI became part of financial strategy.

Destination: Regulated intelligence

Today’s AI environment looks fundamentally different from the early Wild West stage. Sam Altman has repeatedly emphasised the need for AI systems to be aligned with human values and subject to oversight. Leaders across the industry echo similar concerns about safety, governance, and responsible deployment.

The tone has shifted from disruption to responsibility.

AI privacy and security now appear in product roadmaps. AI security best practices shape engineering standards. Trust-centric product design influences enterprise positioning. Performance still matters. Speed still sells. But intelligence without trust now feels incomplete.

The road ahead: Trust as default architecture

The next chapter of AI development will likely normalise Trustworthy AI as a baseline expectation. Buyers will assume encryption exists. They will expect explainability. They will demand human oversight for high-risk systems. On-device AI processing may expand further. Edge computing could reduce centralised vulnerabilities. Regulatory harmonisation may create clearer global standards.

In this environment, competitive differentiation will depend on how deeply organisations embed AI by design principles. Trust will not be optional. It will be infrastructure.

Distilled

From frontier experimentation to disciplined innovation

The journey from Wild West experimentation to regulated intelligence was not smooth. It was shaped by failure, scrutiny, and public debate. Trustworthy AI emerged through correction. Early systems demonstrated capability without control. Public incidents revealed the cost of oversight gaps. Regulation formalised expectations. Enterprises recalibrated incentives.

Now, AI privacy and security shape buying decisions. Ethical AI development influences brand value. Secure AI systems strengthen enterprise confidence. Trustworthy AI has become more than compliance. It represents disciplined innovation. The Wild West rewarded speed. Regulated intelligence rewards responsibility. And in today’s enterprise market, responsibility increasingly defines what premium truly means.