AI Safety Through a Gender Lens: Why It Matters Now

Artificial intelligence (AI) promises extraordinary benefits, but it also introduces complex risks. As governments and industry leaders work to define the rules, AI safety has become a global priority. Yet most of the people shaping these frameworks are still men. When gender enters the conversation, the perspective widens: the discussion becomes fairer, more inclusive, and more grounded in lived realities.

This piece explores how female researchers and policy advocates are influencing AI safety — from the EU AI Act to emerging US and UK safety institutes — and why gender diversity remains central to building responsible AI systems.

Why gender matters in AI safety

Before we talk about policy or frameworks, we have to talk about people — and the blind spots that still define how AI sees us.

Bias in AI isn’t hypothetical. When systems are trained on incomplete or skewed data, they inevitably reflect society’s existing inequalities — often amplifying them. In hiring tools, health diagnostics, and predictive policing, AI systems have shown gendered errors.

Consider healthcare AI tools that misdiagnose women because most training data is male-centric. Or hiring algorithms that downgrade female candidates because they’re trained on historical patterns of male success. When women aren’t at the table designing, auditing, or governing these systems, their realities and risks are filtered out.

If AI safety frameworks ignore gender, they risk entrenching inequalities. A system might pass a fairness test overall yet still discriminate against women or marginalised groups. Feminist and intersectional scholars argue that AI ethics must always consider power, identity, and context, especially when shaping AI policy and regulation.
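To make the "pass overall, fail for some women" point concrete, here is a minimal Python sketch of a disaggregated check. It runs on hypothetical, hand-built hiring outcomes. The gender labels, the generic groups "A" and "B", and the helpers `selection_rates` and `disparity_ratio` are all invented for illustration and are not drawn from any real audit. Men and women have identical selection rates in aggregate, but splitting by gender and group shows that women in group B are selected far less often.

```python
from collections import defaultdict

# Hypothetical toy data, constructed for illustration only.
# Each record is (gender, group, selected).
records = (
    [("man", "A", True)] * 4 + [("man", "A", False)] * 6
    + [("man", "B", True)] * 4 + [("man", "B", False)] * 6
    + [("woman", "A", True)] * 7 + [("woman", "A", False)] * 3
    + [("woman", "B", True)] * 1 + [("woman", "B", False)] * 9
)


def selection_rates(rows, key):
    """Return the share of selected records for each subgroup produced by key(row)."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for row in rows:
        subgroup = key(row)
        totals[subgroup] += 1
        selected[subgroup] += int(row[2])
    return {subgroup: selected[subgroup] / totals[subgroup] for subgroup in totals}


def disparity_ratio(rates):
    """Lowest subgroup rate divided by highest; 1.0 means equal rates."""
    return min(rates.values()) / max(rates.values())


# Aggregate check: split by gender only.
by_gender = selection_rates(records, key=lambda r: r[0])
# Intersectional check: split by gender and group together.
by_gender_and_group = selection_rates(records, key=lambda r: (r[0], r[1]))

print(by_gender)                                       # {'man': 0.4, 'woman': 0.4}
print(disparity_ratio(by_gender))                      # 1.0
print(by_gender_and_group)
print(round(disparity_ratio(by_gender_and_group), 2))  # 0.14
```

The aggregate figure is not wrong; it simply averages away the gap between women in group A and women in group B. A real audit would use real outcome data and suitable statistical tests. This sketch only shows why disaggregating matters.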

Women influencing AI policy and regulation globally

Across continents, women are redefining what safety in AI means, not through slogans but through laws, institutions, and persistent advocacy.

European Union and the AI Act

The EU AI Act, one of the world's first attempts at comprehensive AI regulation, has drawn significant criticism for its gender neutrality. On paper, the Act sorts AI systems into risk levels and mandates accountability. In practice, however, it assumes a “generic” user and overlooks how technology affects people differently across gender, race, and class.

Reports such as “The EU Artificial Intelligence Act Through a Gender Lens” have exposed these blind spots. Female legal scholars have called for mandatory gender audits, intersectional risk assessments, and stronger enforcement mechanisms. They argue that regulation cannot claim fairness if it does not treat gender as a variable that shapes outcomes.

Their advocacy is slowly reshaping the conversation in Brussels, pushing the Act beyond its technical language into a more human-centered frame — one that acknowledges inequality as a safety issue.

United States and United Kingdom: AI safety institutes and ethics centres

In the US, AI safety is rising on the policy agenda through new institutions and funding programmes. Female researchers are bringing critical perspectives into those spaces.

For example, Katherine Elkins, a humanities and ethics researcher, became a principal investigator for the US AI Safety Institute under NIST, helping ensure safety research includes humanistic and social angles. Similarly, women leading AI ethics centres and university labs are pushing for more inclusive governance models, demanding that ethics be built into design from the start.

In the UK, Jade Leung left a high-paying corporate AI role to join the UK’s AI Safety Institute (AISI) as CTO. She advocates for a public-interest institution to steer AI policy away from narrow corporate agendas. Her move shows how women are stepping into crucial roles in AI safety institutes, shaping how we test, monitor, and regulate powerful models.

Advocates, networks, and civil society

Outside the walls of government, another kind of leadership is taking root — one that’s community-built and unapologetically vocal.

Beyond policy tables, women in civil society are driving grassroots accountability. Women in AI Ethics, for instance, works to counter the exclusion of women from AI debates. Women4Ethical AI, under UNESCO, highlights how gender imbalance in AI development perpetuates discrimination and calls for inclusive design and regulation.

Feminist tech justice advocates such as Safiya Noble use research to expose how algorithms embed structural bias. They call for stronger guardrails, accountability, and remedies for harm. These actors also promote better AI policy templates that include gender impact assessments, stakeholder engagement with women’s organisations, and intersectional risk evaluation.

How women are reshaping the AI safety debate

The difference isn't just who's at the table; it's what gets discussed once they're there. Women's voices are changing both the substance and the process of AI safety.

1. Agenda setting and framing: Women bring different priorities to the AI safety agenda. Instead of focusing on abstract debates about alignment or existential risk, female experts highlight immediate harms: bias in healthcare, domestic violence risks from surveillance, misuse of deepfakes, and digital violence against women. They urge that AI regulation include gendered risk categories and require fundamental rights assessments that consider gender-based harms.

2. Methodologies and interdisciplinarity: Female scholars bridge disciplines such as law, sociology, feminist studies, and computer science, making AI safety less technocratic and more socially aware. Their work promotes gender mainstreaming in AI policy, ensuring that every governance measure carries a gender perspective. They also champion methods such as participatory design, counterfactual auditing, intersectional impact metrics, and bias stress-testing on gendered data.

3. Institutional reform and capacity building: Women in AI safety stress that enforcement bodies, such as equality commissions and regulators, must have the authority and resources to detect and penalise gendered algorithmic harm. They also advocate for AI safety institutes to include fairness teams, gender auditors, and diverse governance boards. Leaders like Jade Leung and Katherine Elkins show how women can guide resource allocation, model evaluation priorities, and oversight frameworks more equitably.

4. Mobilisation and social legitimacy: Women activists bring credibility and moral urgency to AI ethics debates. When communities see AI as gender-blind or unsafe for women, these advocates push policymakers to act. Their presence ensures AI policy remains socially responsive, not just expert-driven.
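Of the methods listed under point 2, counterfactual auditing is the easiest to show in a few lines. The minimal Python sketch below assumes a hypothetical scoring model, `toy_score`, that has been given a deliberate gender penalty so the audit has something to find. The `Candidate` type, the `SWAP` table, and `counterfactual_gaps` are likewise invented for illustration. The audit holds every other attribute fixed, swaps only the gender field, rescores each record, and flags any candidate whose score changes.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Candidate:
    years_experience: int
    gender: str  # simplified to "woman" / "man" for this sketch


def toy_score(candidate: Candidate) -> float:
    """Hypothetical model with a deliberate flaw: it subtracts points for women."""
    penalty = 1.5 if candidate.gender == "woman" else 0.0
    return candidate.years_experience - penalty


# Maps each gender value to its counterfactual in this simplified sketch.
SWAP = {"woman": "man", "man": "woman"}


def counterfactual_gaps(model, candidates, tolerance=1e-9):
    """Rescore each candidate with only the gender field swapped.

    Returns (candidate, score_gap) pairs where the gap exceeds tolerance.
    """
    flagged = []
    for candidate in candidates:
        twin = replace(candidate, gender=SWAP[candidate.gender])
        gap = model(candidate) - model(twin)
        if abs(gap) > tolerance:
            flagged.append((candidate, gap))
    return flagged


candidates = [Candidate(5, "woman"), Candidate(5, "man"), Candidate(2, "woman")]

# Every candidate is flagged, because the toy model uses gender directly.
for candidate, gap in counterfactual_gaps(toy_score, candidates):
    print(candidate, gap)

# A model that ignores gender produces no flags.
print(counterfactual_gaps(lambda c: float(c.years_experience), candidates))  # []
```

One design caveat: swapping a single field only catches direct use of gender. A model that relies on proxies, such as career gaps or gendered wording in a CV, can pass this check, which is one reason counterfactual auditing is usually paired with intersectional metrics and stress-testing.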

Challenges and limits to influence

Progress is happening, but it’s far from simple. Women in AI safety still face barriers that don’t fall overnight.

- Representation gaps: Leadership in AI remains mostly male. Many female researchers publish less and struggle for recognition or collaboration.

- Tokenism: Women are invited into the room while the real decisions are still made elsewhere.

- Structural barriers: Academia and the tech industry move slowly on gender balance. Funding systems rarely close the gap.

- Resistance: Some industry voices see gender safeguards as obstacles to innovation. Others don’t see why they matter.

- Complexity: Turning feminist ideas into practical law or audits isn’t easy. The details are messy and require persistence.

Even so, change is visible. Women are shaping the EU AI Act, joining advisory boards, and holding companies accountable. Their persistence keeps gender firmly in the AI safety conversation, where it belongs.

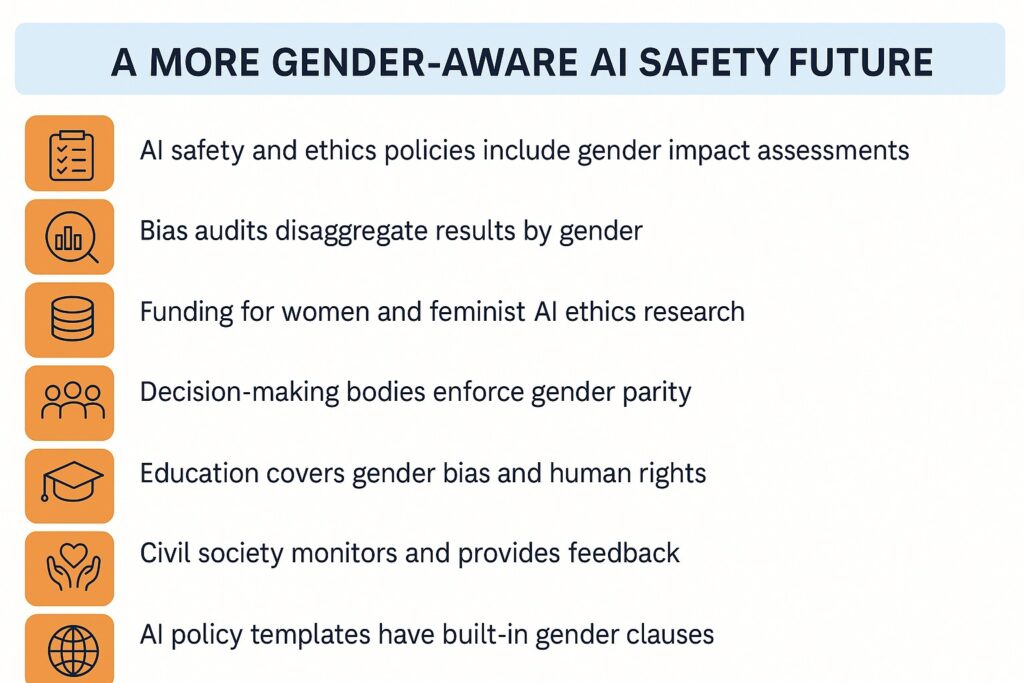

What a more gender-aware AI safety future might look like

To embed these gains into lasting practice, here’s what the future of AI safety could include:

Distilled

AI safety isn't only about systems or rules; it's about who gets heard while those systems and rules are shaped. When more women and gender-diverse people take part, the conversation shifts. It feels less distant, more human. Their presence raises questions others might overlook, questions of fairness, care, and trust. That's when technology starts working for everyone, not just the few who built it.