AI Content Detection Tools: Fair or Flawed?

As generative AI continues to grow, a new kind of digital referee is stepping into the spotlight: AI content detection tools. These tools, like GPTZero and Originality.AI, claim to identify text written by AI models.

They are becoming increasingly common in both education and enterprise settings. But are they accurate and fair, or are we giving flawed gatekeepers too much power?

Let’s take a closer look.

Why are AI content detection tools needed?

The rise of ChatGPT and similar models has changed how people write, learn and work. Students can use AI to draft essays. Employees can lean on AI to create reports or emails. While this boosts productivity, it raises serious questions about originality and ethics.

AI detectors in education aim to protect academic integrity: universities and schools want to ensure that students submit their own work. AI detectors in enterprise check whether content, especially sensitive reports or pitches, is genuinely human-made.

In both spaces, the goal is the same: maintain trust. But that’s where the debate begins.

How do these tools work?

AI content detection tools work by analysing the structure and style of writing. They don’t read or understand meaning like humans do. Instead, they scan for patterns that often reveal whether a piece was written by a person or an AI.

Human writing tends to be unpredictable, full of quirks, tone shifts, and uneven phrasing. In contrast, AI-generated content is usually more polished, confident, and stylistically consistent. Detection tools use these differences to assign a likelihood score.

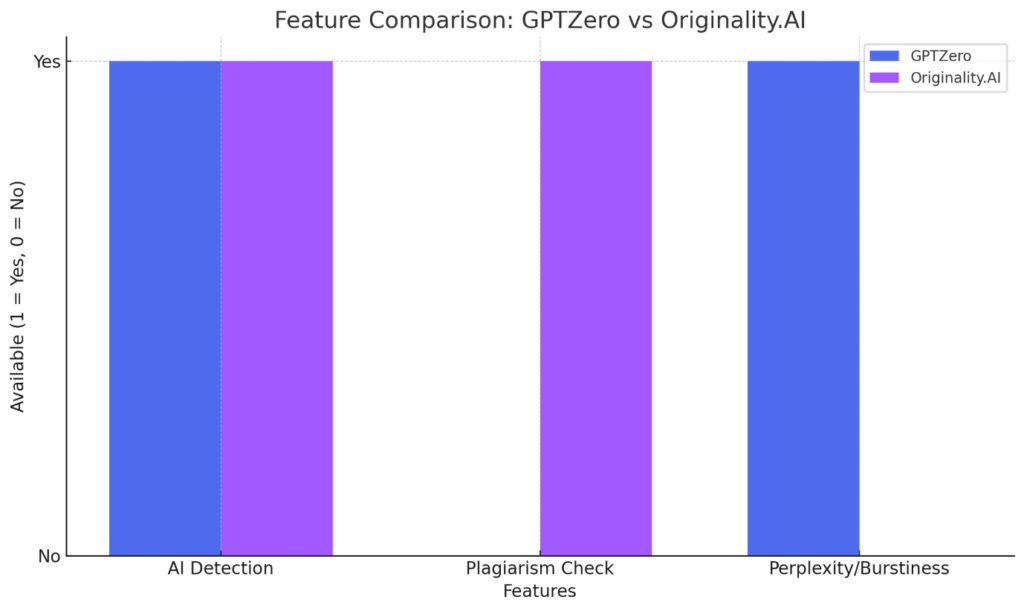

Two of the most well-known tools in this space are GPTZero and Originality.AI, each serving slightly different audiences.

GPTZero, which gained popularity in academic circles, evaluates text on two key metrics: perplexity (how unpredictable the text is to a language model) and burstiness (how much that unpredictability varies from sentence to sentence). Human writing typically scores higher on both, while AI writing tends to be flatter and more uniform.
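To make the two metrics more concrete, here is a minimal Python sketch of how perplexity and burstiness can be computed. It is not GPTZero's implementation: real detectors score text with a large language model, whereas this sketch assumes a toy unigram word-frequency model (the hypothetical `UnigramModel` class) built from a tiny reference text. Perplexity is the exponential of the average negative log-probability per word; burstiness is taken here, as an assumption, to be the standard deviation of per-sentence perplexity.

```python
import math
import re
import statistics
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text: str) -> list[str]:
    """Split on sentence-ending punctuation (simplistic, for illustration)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


class UnigramModel:
    """Hypothetical stand-in for a language model: word frequencies with add-one smoothing."""

    def __init__(self, reference_text: str):
        tokens = tokenize(reference_text)
        self.counts = Counter(tokens)
        self.total = len(tokens)
        self.vocab_size = len(self.counts) + 1  # +1 reserves probability for unseen words

    def prob(self, word: str) -> float:
        return (self.counts[word] + 1) / (self.total + self.vocab_size)


def perplexity(model: UnigramModel, text: str) -> float:
    """exp of the mean negative log-probability per token: higher = more surprising."""
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text has no tokens")
    nll = -sum(math.log(model.prob(t)) for t in tokens) / len(tokens)
    return math.exp(nll)


def burstiness(model: UnigramModel, text: str) -> float:
    """Population std. dev. of per-sentence perplexity: higher = more uneven."""
    scores = [perplexity(model, s) for s in split_sentences(text) if tokenize(s)]
    return statistics.pstdev(scores) if len(scores) > 1 else 0.0


# Usage: a tiny reference corpus stands in for a model's training data.
model = UnigramModel("the cat sat on the mat. the dog sat on the rug. the cat saw the dog.")
uniform = "The cat sat on the mat. The dog sat on the rug."
varied = "The cat sat on the mat. Quantum marmalade erupted spectacularly overhead!"
for label, text in [("uniform", uniform), ("varied", varied)]:
    print(label, round(perplexity(model, text), 2), round(burstiness(model, text), 2))
```

In this toy setup the repetitive text gets zero burstiness, because both of its sentences score identically, while the text with an unexpected second sentence scores higher on both measures. That is the pattern detectors read as "more human", and it also hints at why the signal is fragile: every score depends on the reference model doing the judging.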

Originality.AI, on the other hand, is aimed at enterprise users, content creators, and SEO professionals. It provides a percentage-based assessment of how likely content is AI-generated and also includes a built-in plagiarism checker, something GPTZero does not offer.

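To illustrate their features at a glance:

- GPTZero: popular in academic settings; scores text on perplexity and burstiness; no built-in plagiarism checker.

- Originality.AI: aimed at enterprise users, content creators and SEO professionals; gives a percentage-based likelihood that content is AI-generated; includes a built-in plagiarism checker.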

These tools offer valuable insights, but they aren’t foolproof. Their results should support human judgment, not replace it. Whether in classrooms or corporate settings, they work best as flags for closer review, not as final verdicts.

Are they accurate?

This is where things get tricky. AI detection accuracy is far from perfect, and even the most advanced tools are prone to errors. These inaccuracies can lead to serious consequences in both education and the workplace.

Here’s what you need to know:

- False positives: Human-written content, especially from ESL (English as a Second Language) students, is often flagged as AI-generated. A University of Cambridge study highlighted this bias, raising fairness concerns.

- False negatives: Some AI-generated content passes undetected as human-written. Lightly editing or rephrasing AI output is often enough to slip past a detector.

- Impact on students: Incorrect flags in academic settings may lead to disciplinary action, damage a student’s academic record, or create a culture of fear and mistrust.

- Risks at work: In enterprise settings, false positives could affect appraisals, trigger HR reviews, or undermine employee trust, especially in content-heavy roles like marketing or communications.

- Lack of context: Tools like GPTZero and Originality.AI don’t account for context, such as whether AI was used only for brainstorming or to generate the entire piece, which can lead to unfair conclusions.
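The cost of false positives is easier to see with some arithmetic. The Python sketch below uses entirely hypothetical numbers, not figures from any real tool or study: 1,000 essays, 10% of them AI-generated, and a detector that wrongly flags 5% of human work and misses 20% of AI work. The helper names (`ConfusionCounts`, `expected_counts`) are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Outcome counts for a detector run over a batch of documents."""
    true_positive: int   # AI-generated text correctly flagged
    false_positive: int  # human-written text wrongly flagged
    true_negative: int   # human-written text correctly passed
    false_negative: int  # AI-generated text that slipped through

    def false_positive_rate(self) -> float:
        """Share of human-written documents that get flagged."""
        return self.false_positive / (self.false_positive + self.true_negative)

    def false_negative_rate(self) -> float:
        """Share of AI-generated documents that go undetected."""
        return self.false_negative / (self.false_negative + self.true_positive)

    def precision(self) -> float:
        """Share of flagged documents that really were AI-generated."""
        return self.true_positive / (self.true_positive + self.false_positive)


def expected_counts(n_docs: int, ai_share: float, fpr: float, fnr: float) -> ConfusionCounts:
    """Expected outcomes for hypothetical base rates and error rates."""
    ai_docs = round(n_docs * ai_share)
    human_docs = n_docs - ai_docs
    false_pos = round(human_docs * fpr)
    false_neg = round(ai_docs * fnr)
    return ConfusionCounts(
        true_positive=ai_docs - false_neg,
        false_positive=false_pos,
        true_negative=human_docs - false_pos,
        false_negative=false_neg,
    )


# Hypothetical scenario: 1,000 essays, 10% AI-written, 5% false-positive rate, 20% false-negative rate.
counts = expected_counts(n_docs=1000, ai_share=0.10, fpr=0.05, fnr=0.20)
flagged = counts.true_positive + counts.false_positive
print(f"Flagged: {flagged}, wrongly flagged humans: {counts.false_positive}")
print(f"Precision of a flag: {counts.precision():.0%}")
```

Under these assumptions the detector flags 125 essays, but 45 of them were written by students, so only 64% of flags are correct. Because most submissions are human, even a small false-positive rate produces a large share of wrongful flags, which is why a score should prompt a conversation rather than a penalty.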

AI content detection tools are useful, but they’re not flawless. Treat their results as signals, not verdicts.

Ethical concerns and legal risks

The use of AI content detection tools isn’t just about accuracy; it also raises ethical and legal challenges.

In classrooms, students may feel watched or mistrusted. Some argue that using AI is part of modern learning and shouldn’t always be penalised. Others fear that detection may widen the gap for students who rely on AI to overcome language or learning barriers.

In the workplace, running detectors on employees’ work risks breaching their trust. Quietly scanning emails or reports can feel invasive, and analysing content without proper consent can raise GDPR and data protection issues.

The human element: Are we still the judge?

Despite the rise of detection tools, human judgment remains essential. No tool can fully understand tone, intention, or context the way people can. Most experts agree: AI-generated content detection tools should guide decisions, not make them.

Take this example: a report is flagged as 70% AI-generated. That doesn’t prove dishonesty. It simply raises a flag that should be looked at more closely. Was AI used to draft ideas? Or was it relied upon to do all the thinking?

Context matters, and intent matters even more. In classrooms, this could mean speaking to a student rather than penalising them immediately. In the workplace, it might involve asking clarifying questions before jumping to conclusions. Teachers and managers must treat AI scores as signals, not verdicts. Otherwise, we risk punishing creativity, curiosity, or simple misunderstandings.

A shift in mindset: Regulate or embrace?

Rather than fighting AI, many are now finding ways to work with it. Educators are moving from restriction to responsible guidance. Some schools now encourage students to use AI tools for planning, idea generation, or editing. This builds digital literacy while keeping academic honesty intact.

In business, AI use is becoming standard. Leaders are now drafting clear internal policies. These rules define where AI use is allowed, when detection tools may be used, and what “acceptable use” looks like. The goal isn’t to ban AI; it’s to ensure it supports human effort and does not replace it.

This mindset shift from banning to guiding is key to using AI responsibly. Without clear boundaries, even well-meaning AI use can cause problems. With transparency and training, however, organisations can turn AI from a threat into a tool.

Are these tools here to stay?

Yes, and they’re just getting started. As generative AI improves, it’s becoming harder to detect. Some tools already struggle with advanced outputs or heavily edited content. Experts predict that future AI could be nearly indistinguishable from human writing. This means detection tools will need to evolve constantly to keep up.

Despite their limits today, AI content detection tools are now part of our digital infrastructure. Startups, researchers, and big tech firms are pouring resources into refining these tools. Expect to see smarter detectors embedded into platforms like Google Docs, Microsoft Word, email clients, and even learning management systems.

Their role will expand, but so will the need for ethical oversight and human input, because even the smartest tool can’t replace thoughtful judgment.

Distilled

Whether in a lecture hall or a boardroom, AI content detection tools are becoming digital gatekeepers. They help ensure originality but also risk overreach. Educators, employers, and developers must work together to strike the right balance. It’s not about punishing AI use; it’s about promoting honest, informed, and fair practices.

When used wisely, AI detectors can help maintain standards in both education and enterprise. But when used blindly, they may do more harm than good. As AI continues to shape our world, one thing is clear: the tools we build to govern it must be as thoughtful as the technology itself.